How to read files on SharePoint Online using Azure Data Factory

Organizations that have moved to Office 365, maintain few key data points on excel files/SharePoint Lists stored in SharePoint Online. If the situation demands you to analyze these data points, it has to be consumed to a database or a data lake. In this article, I would be sharing my experience on consuming an excel file into an AzureSQL using Azure Data Factory, which is updated daily on a SharePoint Online folder.

Pre-Requisites

Azure Data Factory Service

Azure SQL DB

Linked Service Created to AzureSQL DB (Link)

The most decisive step of this exercise is to create an app registration. It ensures the connectivity of SharePoint Online and Azure Data Factory via Azure Active Directory.

I would recommend you to use an Office 365 Service Account. Using an individual email account would impact the app registration in the event of that particular person resigning from the company and the infrastructure team removing him/her from the Active Directory.

As the initial step log into the Azure Portal using the service account and search for the app registration. Create a new registration then fill in the values.

Once you create the app registration, please make a note of the below parameters.

Then select Certificates & Secrets of the particular app registration and create a new client secret. Please remember to note down the value of the secret as Azure will automatically mask it when you refresh the screen. In case you missed to note it down you can create a new client secret.

Grant SharePoint Online site permission to your registered application afterward. This step needs to be performed by a SharePoint SiteCollection Admin. He/She needs to navigate to the below link and fill in the values.

Replace your organization-related values accordingly.

HTTP://[your-org-sharepoint-tenant-name].sharepoint.com/sites/[sitename]/_layouts/15/appinv.aspx

If you fill in the (Application /Client) ID with the previously noted down value and hit lookup few values will be populated automatically.

Fill localhost.com for the App Domain and permission request XML with below. This grants read permission to the particular app registration.

<AppPermissionRequests AllowAppOnlyPolicy=”true”>

<AppPermissionRequest Scope=”http://sharepoint/content/sitecollection/web" Right=”Read”/>

</AppPermissionRequests>

When you proceed to the next step, it will prompt you to trust the app, do trust it.

Now all the preliminary work is completed. Let us move onto the Azure Data Factory to author the pipeline.

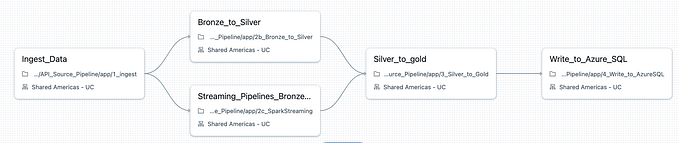

The pipeline consist of a WebActivity and a Copy Activity. We use the web activity to make the HTTP request and capture payload using a Copy activity.

Web Activity parameters

- URL:

https://accounts.accesscontrol.windows.net/[Tenant-ID]/tokens/OAuth/2. Replace the tenant ID with previously noted down values. - Method: POST

- Headers:

- Content-Type: application/x-www-form-urlencoded

- Body:

grant_type=client_credentials&client_id=[Client-ID]@[Tenant-ID]&client_secret=[Client-Secret]&resource=00000003-0000-0ff1-ce00-000000000000/[Tenant-Name].sharepoint.com@[Tenant-ID]. Replace the client ID, client secret, tenant ID, and tenant name.

Now we need to chain it with a Copy activity with HTTP connector as a source in order to copy SharePoint Online file content.

How to create an HTTP LinkedService

HTTP LinkedService parameters

- Base URL:

https://[site-url]/_api/web/GetFileByServerRelativeUrl('[relative-path-to-file]')/$value. Replace the site URL and relative path to the file. Sample relative path to file as/sites/[your-site-name]/Shared Documents/Exceptions.xlsx. - Authentication type: Anonymous (to use the Bearer token configured in copy activity source later)

Let’s create a source dataset using the HTTP linked Service. Remember to fill in the sheet name or the index of the sheet(0..n).

Fill in the copy activity parameters with the below values.

Request Method : GET

Additional Headers: @{concat(‘Authorization: Bearer ‘, activity(‘WebAct’).output.access_token)}

As the final step, we should create the output dataset for the AzureSQL sink.

Note

Make sure you have the identical schema of the particular excel sheet data on the database table as well. In the copy activity, we do not import the schema mapping.

When the pipeline executes, the excel sheet data will be populated in the table. Hope this article would help you as a quick start guide on how to use the Azure Data Factory to consume files on SharePoint Online.

References

https://docs.microsoft.com/en-us/azure/data-factory/connector-sharepoint-online-list

https://docs.microsoft.com/en-us/azure/data-factory/connector-http